Fresh Installation

Pre-Installation Checklist

Before you begin, check the following:

- Release 6.3.0 requires at least ESX 6.5; ESX 6.7 Update 2 is recommended. To install on ESX 6.5, see Installing on ESX 6.5.

- Ensure that your system can connect to the network. You will be asked to provide a DNS Server and a host that can be resolved by the DNS Server and responds to ping. The host can either be an internal host or a public domain host like google.com.

- Deployment type – Enterprise or Service Provider. The Service Provider deployment provides multi-tenancy.

- Whether FIPS should be enabled

- Install type:

- All-in-one with Supervisor only, or

- Cluster with Supervisor and Workers

- Storage type:

- Online – Local, NFS, or Elasticsearch

- Archive – NFS or HDFS

- If you use external storage, you must configure it before beginning FortiSIEM deployment.

- Determine hardware requirements:

| Node | vCPU | RAM | Local Disks |
|---|---|---|---|
| Supervisor (All in one) | Minimum – 12, Recommended – 32 | Minimum – …, Recommended – … | OS – 25GB, OPT – 100GB, CMDB – 60GB, SVN – 60GB, Local Event database – based on need |
| Supervisor (Cluster) | Minimum – 12, Recommended – 32 | Minimum – …, Recommended – … | OS – 25GB, OPT – 100GB, CMDB – 60GB, SVN – 60GB |
| Workers | Minimum – 8, Recommended – 16 | Minimum – 16GB, Recommended – 24GB | OS – 25GB, OPT – 100GB |
| Collector | Minimum – 4, Recommended – 8 (based on load) | Minimum – 4GB, Recommended – 8GB | OS – 25GB, OPT – 100GB |

Note: Compared to FortiSIEM 5.x, one more disk (OPT) is required; it provides a cache for FortiSIEM.

The 100GB OPT disk is a single disk that is split into two partitions, /opt and swap. FortiSIEM creates and manages these partitions when configFSM.sh runs.
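If you provision VMs from scripts, a quick check against the hardware table above can catch undersized nodes before installation. The following is a minimal bash sketch, not a FortiSIEM tool: the functions check_node and need are hypothetical, and only the minimums the table states are checked (Supervisor RAM is skipped because the table gives no figure for it).

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check against the minimums in the hardware table.
# Sizes are whole numbers in GB; vCPU is a count.

# need NAME HAVE MIN: print a message and fail if HAVE is below MIN.
need() {
  local what=$1 have=$2 min=$3
  if (( have < min )); then
    echo "$what: $have < minimum $min"
    return 1
  fi
}

# check_node ROLE VCPU RAM_GB OS_GB OPT_GB [CMDB_GB SVN_GB]
# ROLE is supervisor, worker, or collector. Returns 1 if anything is too small.
check_node() {
  local role=$1 vcpu=$2 ram=$3 os=$4 opt=$5 cmdb=${6:-0} svn=${7:-0} rc=0
  case $role in
    supervisor)
      need vCPU "$vcpu" 12 || rc=1
      # The table gives no Supervisor RAM figure, so RAM is not checked here.
      need CMDB_GB "$cmdb" 60 || rc=1
      need SVN_GB "$svn" 60 || rc=1
      ;;
    worker)
      need vCPU "$vcpu" 8 || rc=1
      need RAM_GB "$ram" 16 || rc=1
      ;;
    collector)
      need vCPU "$vcpu" 4 || rc=1
      need RAM_GB "$ram" 4 || rc=1
      ;;
    *)
      echo "unknown role: $role" >&2
      return 2
      ;;
  esac
  # Every node type needs the OS and OPT disks.
  need OS_GB "$os" 25 || rc=1
  need OPT_GB "$opt" 100 || rc=1
  return "$rc"
}
```

For example, check_node worker 8 24 25 100 prints nothing and succeeds, while check_node collector 2 8 25 100 prints "vCPU: 2 < minimum 4" and returns 1.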

Before proceeding to FortiSIEM deployment, you must configure the external storage.

- For NFS deployment, see the FortiSIEM NFS Storage Guide.

- For Elasticsearch deployment, see the FortiSIEM Elasticsearch Storage Guide.
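The checklist's DNS and ping requirement can be pre-checked from a Linux shell before you start the installer. This is a minimal sketch assuming glibc's getent and iputils ping are available. getent uses the machine's configured resolver (which may include /etc/hosts), so it approximates, rather than reproduces, the check the installer runs against the DNS server you enter. The function name check_test_host is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check for the checklist's test host: it must resolve and
# answer one ping.
check_test_host() {
  local host=$1
  if [[ -z $host ]]; then
    echo "usage: check_test_host HOST" >&2
    return 2
  fi
  # getent uses the system resolver configuration (DNS, /etc/hosts, ...).
  if ! getent hosts "$host" >/dev/null; then
    echo "cannot resolve $host"
    return 1
  fi
  # Send one echo request and wait at most 2 seconds (Linux iputils ping).
  if ! ping -c 1 -W 2 "$host" >/dev/null 2>&1; then
    echo "$host resolves but does not answer ping"
    return 1
  fi
  echo "$host OK"
}
```

For example, run check_test_host google.com from a machine on the same network the appliance will use.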

All-in-one Installation

This is the simplest installation, with a single Virtual Appliance. If you use external storage, you must configure it before proceeding with installation.

- Set Network Time Protocol for ESX

- Import FortiSIEM into ESX

- Edit FortiSIEM Hardware Settings

- Start FortiSIEM from the VMware Console

- Configure FortiSIEM via GUI

- Upload the FortiSIEM License

- Choose an Event Database

Set Network Time Protocol for ESX

FortiSIEM needs accurate time. To ensure this, you must enable NTP on the ESX host on which the FortiSIEM Virtual Appliance will be installed.

- Log in to your vCenter and select your ESX host.

- Click the Configure tab.

- Under System, select Time Configuration.

- Click Edit.

- Enter the time zone properties.

- Enter the IP address of the NTP servers to use.

If you do not have an internal NTP server, you can access a publicly available one at http://tf.nist.gov/tf-cgi/servers.cgi.

- Choose an NTP Service Startup Policy.

- Click OK to apply the changes.

Import FortiSIEM into ESX

- Go to the Fortinet Support website https://support.fortinet.com to download the ESX package FSM_FULL_ALL_ESX_6.3.0_Build0331.zip. See Downloading FortiSIEM Products for more information on downloading products from the support website.

- Uncompress the packages for Super/Worker and Collector (using the 7-Zip tool) to the location where you want to install the image. Identify the .ova file.

- Right-click on your own host and choose Deploy OVF Template.

The Deploy OVF Template dialog box appears.

- In 1 Select an OVF template, select Local file and navigate to the .ova file. Click Next. If you are installing from a URL, select URL and paste the OVA URL into the field beneath URL.

- In 2 Select a Name and Folder, make any needed edits to the Virtual machine name field. Click Next.

- In 3 Select a compute resource, select any needed resource from the list. Click Next.

- Review the information in 4 Review details and click Next.

- In 5 License agreements, click Next.

- In 6 Select Storage select the following, then click Next:

- A disk format from the Select virtual disk format drop-down list. Select Thin Provision.

- A VM Storage Policy from the drop-down list.

- Select Disable Storage DRS for this virtual machine, if necessary, and choose the storage DRS from the table.

- In 7 Select networks, select the source and destination networks from the drop-down lists. Click Next.

- In 8 Ready to complete, review the information and click Finish.

- In the vSphere client, go to your installed OVA.

- Right-click your installed OVA (example: FortiSIEM-611.0331.ova) and select Edit Settings > VM Options > General Options. Set Guest OS and Guest OS Version (Linux and 64-bit).

- Open the Virtual Hardware tab. Set CPU to 16 and Memory to 64GB.

- Click Add New Device and create a device.

Add additional disks to the virtual machine definition. These will be used for the additional partitions in the virtual appliance. An All In One deployment requires the following additional partitions.

| Disk | Size | Disk Name |
|---|---|---|
| Hard Disk 2 | 100GB | /opt |
| Hard Disk 3 | 60GB | /cmdb |
| Hard Disk 4 | 60GB | /svn |
| Hard Disk 5 | 60GB+ | /data (see the following note) |

The 100GB /opt disk is a single disk that is split into two partitions, /opt and swap. FortiSIEM creates and manages these partitions when configFSM.sh runs.

Note on Hard Disk 5:

- Add a 5th disk if using local storage in an All In One deployment. Otherwise, a separate NFS share or Elasticsearch cluster must be used for event storage.

- 60GB is the minimum event DB disk size for small deployments; provision significantly more event storage for higher EPS deployments. See the FortiSIEM Sizing Guide for additional information.

- NFS or Elasticsearch event DB storage is mandatory for multi-node cluster deployments.

After you click OK, a Datastore Recommendations dialog box opens. Click Apply.

- Do not turn off or reboot the system during deployment, which may take 7 to 10 minutes to complete. When the deployment completes, click Close.

Edit FortiSIEM Hardware Settings

- In the VMware vSphere client, select the imported Supervisor.

- Go to Edit Settings > Virtual hardware.

- Set hardware settings as in Pre-Installation Checklist. The recommended settings for the Supervisor node are:

- CPU = 16

- Memory = 64 GB

- Four hard disks:

- OS – 25GB

- OPT – 100GB

- CMDB – 60GB

- SVN – 60GB

Example settings for the Supervisor node:

- If the event database is local, add another disk for storing event data, sized to your needs.

- Network Interface card

Start FortiSIEM from the VMware Console

- In the VMware vSphere client, select the Supervisor, Worker, or Collector virtual appliance.

- Right-click to open the options menu and select Power > Power On.

- Open the Summary tab for the virtual appliance and select Launch Web Console.

Network Failure Message: When the console starts up for the first time, you may see a Network eth0 Failed message; this is expected behavior.

- Select Web Console in the Launch Console dialog box.

- When the command prompt window opens, log in with the default login credentials: user root and password ProspectHills.

- You will be required to change the password. Remember this password for future use.

At this point, you can continue configuring FortiSIEM by using the GUI.

Configure FortiSIEM via GUI

Follow these steps to configure FortiSIEM by using a simple GUI.

- Log in as user root with the password you set when you first logged in to the console.

- At the command prompt, go to /usr/local/bin and enter configFSM.sh, for example:

# configFSM.sh

- In the VM console, select 1 Set Timezone and then press Next.

- Select your Region, and press Next.

- Select your Country, and press Next.

- Select the Country and City for your timezone, and press Next.

- Select 1 Supervisor. Press Next.

Regardless of whether you select Supervisor, Worker, or Collector, you will see the same series of screens.

- If you want to enable FIPS, then choose 2. Otherwise, choose 1. You have the option of enabling FIPS (option 3) or disabling FIPS (option 4) later.

- Configure the IPv4 network by entering the following fields, then press Next.

| Option | Description |
|---|---|
| Host Name | The Supervisor's host name |
| IPv4 Address | The Supervisor's IPv4 address |
| NetMask | The Supervisor's IPv4 subnet |
| Gateway | IPv4 network gateway address |
| FQDN | Fully-qualified domain name |
| DNS1, DNS2 | Addresses of the IPv4 DNS server 1 and DNS server 2 |

- Test network connectivity by entering a host name that can be resolved by your DNS Server (entered in the previous step) and can respond to a ping. The host can either be an internal host or a public domain host like google.com. Press Next.

- The final configuration confirmation is displayed.

Verify that the parameters are correct. If they are not, then press Back to return to previous dialog boxes to correct any errors. If everything is OK, then press Run.

The options are described in the following table.

| Option | Description |
|---|---|
| -r | The FortiSIEM component being configured |
| -z | The time zone being configured, for example US/Pacific, Asia/Shanghai, Europe/London, or Africa/Tunis |
| -i | IPv4-formatted address |
| -m | Address of the subnet mask |
| -g | Address of the gateway server used |
| --host | Host name |
| -f | FQDN address: fully-qualified domain name |
| -t | The IP type. The value can be 4 (for IPv4), 6 (for IPv6), or 64 (for both IPv4 and IPv6). Note: The value 6 is not currently supported. |
| --dns1, --dns2 | Addresses of DNS server 1 and DNS server 2 |
| -o | Installation option (install_without_fips, install_with_fips, enable_fips, disable_fips, or change_ip) |
| --testpinghost | The URL used to test connectivity |

- It will take some time for this process to finish. When it is done, proceed to Upload the FortiSIEM License. If the VM fails, you can inspect the ansible.log file located at /usr/local/fresh-install/logs to try to identify the problem.
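If you keep a record of the values used for each node, the option table above can be mirrored in a small script. The command that takes these flags is not shown here, so this bash sketch only builds and validates an argument array; it does not run anything. The function build_fsm_args and the array FSM_ARGS are hypothetical, as is the value supervisor passed to -r in the usage example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: collect the configuration flags from the option table
# into the global FSM_ARGS array, rejecting values the table rules out.
build_fsm_args() {
  if (( $# != 12 )); then
    echo "usage: build_fsm_args ROLE TZ IP MASK GW HOST FQDN IPTYPE DNS1 DNS2 OPTION PINGHOST" >&2
    return 2
  fi
  local role=$1 tz=$2 ip=$3 mask=$4 gw=$5 host=$6 fqdn=$7
  local iptype=$8 dns1=$9 dns2=${10} option=${11} pinghost=${12}

  # -t: 4 (IPv4) or 64 (IPv4 and IPv6); 6 is listed but not currently supported.
  case $iptype in
    4|64) ;;
    *) echo "unsupported -t value: $iptype" >&2; return 1 ;;
  esac

  # -o: one of the installation options listed in the table.
  case $option in
    install_without_fips|install_with_fips|enable_fips|disable_fips|change_ip) ;;
    *) echo "unknown -o value: $option" >&2; return 1 ;;
  esac

  FSM_ARGS=(-r "$role" -z "$tz" -i "$ip" -m "$mask" -g "$gw"
            --host "$host" -f "$fqdn" -t "$iptype"
            --dns1 "$dns1" --dns2 "$dns2" -o "$option"
            --testpinghost "$pinghost")
}
```

For example, build_fsm_args supervisor US/Pacific 10.0.0.10 255.255.255.0 10.0.0.1 sp01 sp01.example.com 4 10.0.0.2 10.0.0.3 install_without_fips google.com followed by printf '%q ' "${FSM_ARGS[@]}" prints the flags in a shell-safe form (all addresses and names here are placeholders).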

Upload the FortiSIEM License

Before proceeding, make sure that you have obtained a valid FortiSIEM license from FortiCare. For more information, see the Licensing Guide.

You will now be asked to input a license.

- Open a web browser and log in to the FortiSIEM UI at https://<supervisor-ip>.

- The License Upload dialog box will open.

- Click Browse and upload the license file.

Make sure that the Hardware ID shown in the License Upload page matches the license.

- For User ID and Password, choose any Full Admin credentials.

For the first time installation, enter admin as the user and admin*1 as the password. You will then be asked to create a new password for GUI access.

- Choose License type as Enterprise or Service Provider.

This option is available only for a first time installation. Once the database is configured, this option will not be available.

- Proceed to Choose an Event Database.

Choose an Event Database

For a fresh installation, you will be taken to the Event Database Storage page. You will be asked to choose between Local Disk, NFS or Elasticsearch options. For more details, see Configuring Storage.

After the License has been uploaded, and the Event Database Storage setup is configured, FortiSIEM installation is complete. If the installation is successful, the VM will reboot automatically. Otherwise, the VM will stop at the failed task.

You can inspect the ansible.log file located at /usr/local/fresh-install/logs if you encounter any issues during FortiSIEM installation.

After installation completes, ensure that the phMonitor is up and running, for example:

# phstatus

The response should be similar to the following.

Cluster Installation

For larger installations, you can choose Worker nodes, Collector nodes, and external storage (NFS or Elasticsearch).

Install Supervisor

Follow the steps in All-in-one Installation, with two differences:

- Setting up hardware - you do not need an event database.

- Setting up an external Event database - configure the cluster for either NFS or Elasticsearch.


You must choose one of the external storage options listed in Choose an Event Database.

Install Workers

Once the Supervisor is installed, follow the same steps in All-in-one Installation to install a Worker, except choose only the OS and OPT disks. The recommended CPU and memory settings for the Worker node, and the required hard disk settings, are:

- CPU = 8

- Memory = 24 GB

- Two hard disks:

- OS – 25GB

- OPT – 100GB

The 100GB OPT disk is a single disk that is split into two partitions, /opt and swap. FortiSIEM creates and manages these partitions when configFSM.sh runs.

Register Workers

Once the Worker is up and running, add the Worker to the Supervisor node.

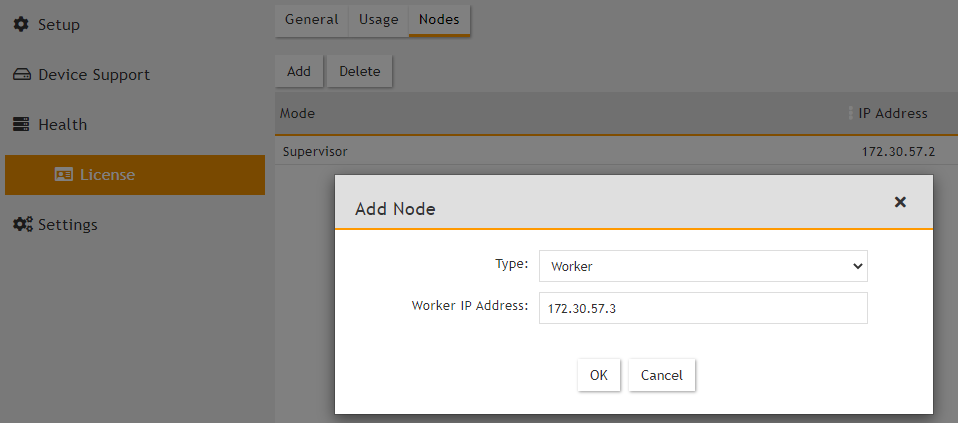

- Go to ADMIN > License > Nodes.

- Select Worker from the drop-down list and enter the Worker's IP address. Click Add.

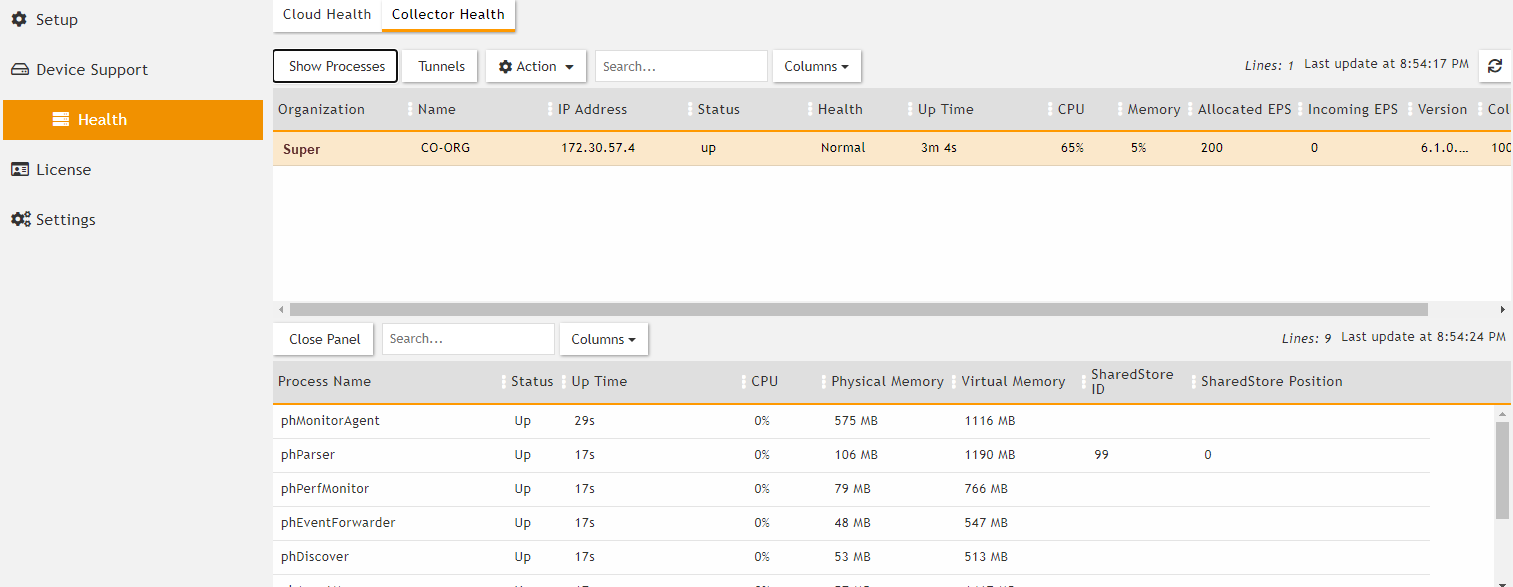

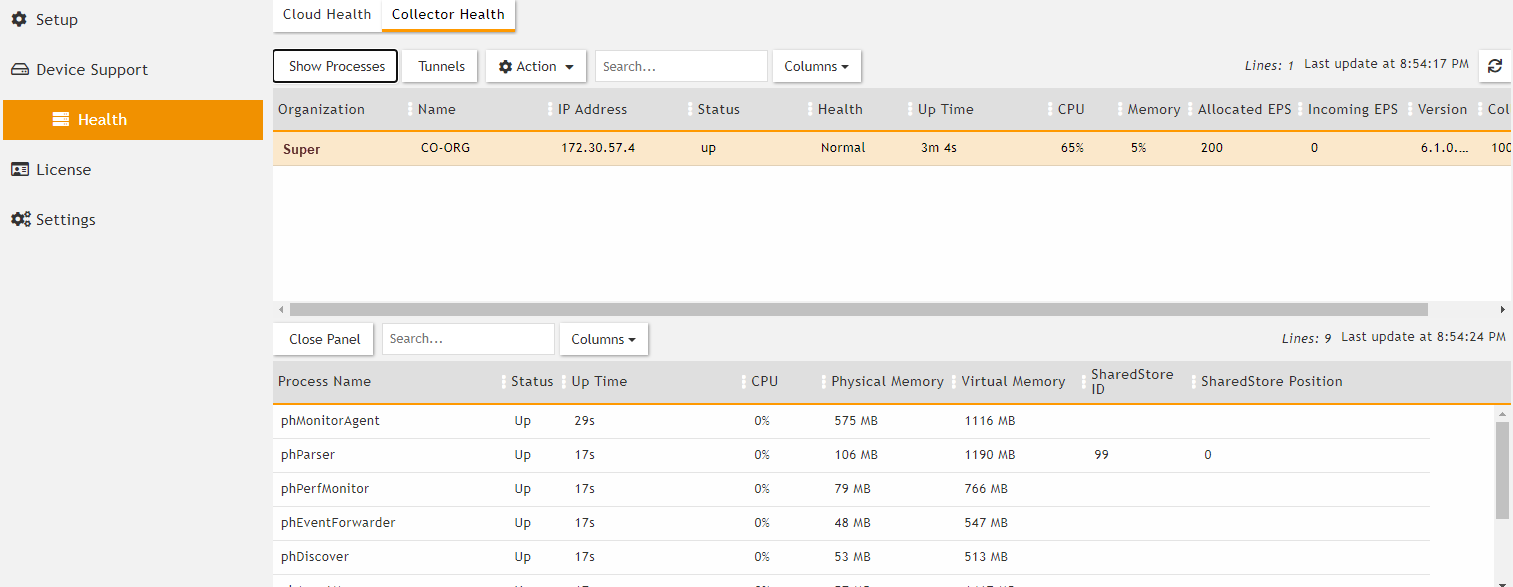

- See ADMIN > Health > Cloud Health to ensure that the Workers are up, healthy, and properly added to the system.

Install Collectors

Once the Supervisor and Workers are installed, follow the same steps in All-in-one Installation to install a Collector, except in Edit FortiSIEM Hardware Settings, choose only the OS and OPT disks. The recommended CPU and memory settings for the Collector node, and the required hard disk settings, are:

- CPU = 4

- Memory = 8GB

- Two hard disks:

- OS – 25GB

- OPT – 100GB

The 100GB OPT disk is a single disk that is split into two partitions, /opt and swap. FortiSIEM creates and manages these partitions when configFSM.sh runs.

Register Collectors

Collectors can be deployed in Enterprise or Service Provider environments.

Enterprise Deployments

For Enterprise deployments, follow these steps.

- Log in to Supervisor with 'Admin' privileges.

- Go to ADMIN > Settings > System > Event Worker.

- Enter the IP of the Worker node. If only a Supervisor node is used, enter the IP of the Supervisor node. Multiple IP addresses can be entered on separate lines. In this case, the Collectors will load balance the upload of events to the listed Event Workers.

Note: A DNS name is recommended instead of IP addresses. If the IP addressing changes, you then only need to update DNS rather than modify the Event Worker IP addresses in FortiSIEM.

- Click OK.


- Go to ADMIN > Setup > Collectors and add a Collector by entering the Collector Name, Guaranteed EPS, Start Time, and End Time.

- SSH to the Collector and run the following script to register Collectors:

# /opt/phoenix/bin/phProvisionCollector --add <user> '<password>' <Super IP or Host> <Organization> <CollectorName>

The password should be enclosed in single quotes to ensure that any non-alphanumeric characters are escaped.

- Set user and password using the admin user name and password for the Supervisor.

- Set Super IP or Host as the Supervisor's IP address.

- Set Organization. For Enterprise deployments, the default name is Super.

- Set CollectorName to the Collector name you entered in ADMIN > Setup > Collectors.

The Collector will reboot during registration.

- Go to ADMIN > Health > Collector Health for the status.
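When registration is scripted rather than typed, quoting works differently: passing the password as a double-quoted variable hands it to phProvisionCollector as exactly one argument, and the shell does not reinterpret characters such as $, !, spaces, or a single quote (a single quote inside the password would break the single-quoted form of the command above). This bash sketch assumes the argument order shown in that command; the function register_collector and the override variable PH_PROVISION are hypothetical additions.

```shell
#!/usr/bin/env bash
# Default path from this guide; PH_PROVISION is a hypothetical override
# (for example, to point at a stub when testing).
PH_PROVISION=${PH_PROVISION:-/opt/phoenix/bin/phProvisionCollector}

# register_collector USER PASSWORD SUPER ORGANIZATION COLLECTOR_NAME
register_collector() {
  if (( $# != 5 )); then
    echo "usage: register_collector USER PASSWORD SUPER ORGANIZATION COLLECTOR_NAME" >&2
    return 2
  fi
  # Each double-quoted expansion is passed as one argument, unchanged.
  "$PH_PROVISION" --add "$1" "$2" "$3" "$4" "$5"
}
```

For example, read the password without echoing it using read -rsp 'Password: ' pw, then run register_collector admin "$pw" <Super IP or Host> Super <CollectorName>.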

Service Provider Deployments

For Service Provider deployments, follow these steps.

- Log in to Supervisor with 'Admin' privileges.

- Go to ADMIN > Settings > System > Event Worker.

- Enter the IP of the Worker node. If only a Supervisor node is used, enter the IP of the Supervisor node. Multiple IP addresses can be entered on separate lines. In this case, the Collectors will load balance the upload of events to the listed Event Workers.

Note: A DNS name is recommended instead of IP addresses. If the IP addressing changes, you then only need to update DNS rather than modify the Event Worker IP addresses in FortiSIEM.

- Click OK.


- Go to ADMIN > Setup > Organizations and click New to add an Organization.

- Enter the Organization Name, Admin User, Admin Password, and Admin Email.

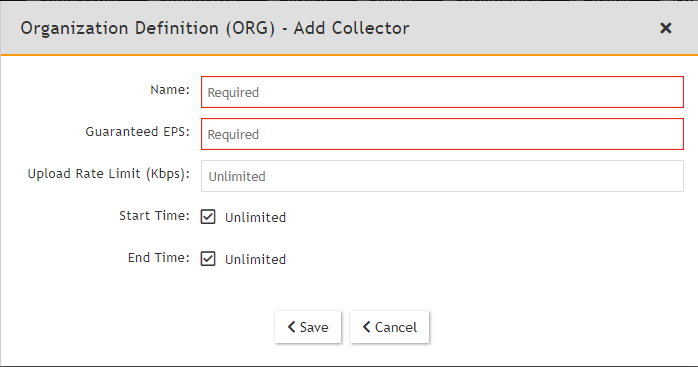

- Under Collectors, click New.

- Enter the Collector Name, Guaranteed EPS, Start Time, and End Time.

The last two values could be set as Unlimited. Guaranteed EPS is the EPS that the Collector will always be able to send. It could send more if there is excess EPS available.

- SSH to the Collector and run the following script to register Collectors:

# /opt/phoenix/bin/phProvisionCollector --add <user> '<password>' <Super IP or Host> <Organization> <CollectorName>

The password should be enclosed in single quotes to ensure that any non-alphanumeric characters are escaped.

- Set user and password using the admin user name and password for the Organization that the Collector is going to be registered to.

- Set Super IP or Host as the Supervisor's IP address.

- Set Organization as the name of an organization created on the Supervisor.

- Set CollectorName to the Collector Name you entered when adding the Collector to the Organization.

The Collector will reboot during registration.

- Go to ADMIN > Health > Collector Health and check the status.

Installing on ESX 6.5

Importing a 6.5 ESX Image

When installing with ESX 6.5, or an earlier version, you will get an error message when you attempt to import the image.

To resolve this import issue, you will need to take the following steps:

- Install 7-Zip.

- Extract the OVA file into a directory.

- In the directory where you extracted the OVA file, edit the file FortiSIEM-VA-6.3.0.0331.ovf, and replace all references to vmx-15 with your compatible ESX hardware version shown in the following table.

Note: For example, for ESX 6.5, replace vmx-15 with vmx-13.

| Compatibility | Description |
|---|---|
| ESXi 6.5 and later | This virtual machine (hardware version 13) is compatible with ESXi 6.5. |
| ESXi 6.0 and later | This virtual machine (hardware version 11) is compatible with ESXi 6.0 and ESXi 6.5. |
| ESXi 5.5 and later | This virtual machine (hardware version 10) is compatible with ESXi 5.5, ESXi 6.0, and ESXi 6.5. |
| ESXi 5.1 and later | This virtual machine (hardware version 9) is compatible with ESXi 5.1, ESXi 5.5, ESXi 6.0, and ESXi 6.5. |
| ESXi 5.0 and later | This virtual machine (hardware version 8) is compatible with ESXi 5.0, ESXi 5.1, ESXi 5.5, ESXi 6.0, and ESXi 6.5. |
| ESX/ESXi 4.0 and later | This virtual machine (hardware version 7) is compatible with ESX/ESXi 4.0, ESX/ESXi 4.1, ESXi 5.0, ESXi 5.1, ESXi 5.5, ESXi 6.0, and ESXi 6.5. |
| ESX/ESXi 3.5 and later | This virtual machine (hardware version 4) is compatible with ESX/ESXi 3.5, ESX/ESXi 4.0, ESX/ESXi 4.1, ESXi 5.1, ESXi 5.5, ESXi 6.0, and ESXi 6.5. It is also compatible with VMware Server 1.0 and later. ESXi 5.0 does not allow creation of virtual machines with ESX/ESXi 3.5 and later compatibility, but you can run such virtual machines if they were created on a host with different compatibility. |
| ESX Server 2.x and later | This virtual machine (hardware version 3) is compatible with ESX Server 2.x, ESX/ESXi 3.5, ESX/ESXi 4.0, ESX/ESXi 4.1, and ESXi 5.0. You cannot create, edit, turn on, clone, or migrate virtual machines with ESX Server 2.x compatibility. You can only register or upgrade them. |

Note: For more information, see here.

- Right-click on your host and choose Deploy OVF Template. The Deploy OVF Template dialog box appears.

- In 1 Select an OVF template, select Local File.

- Navigate to the folder with the OVF file.

- Select all the contents that are included with the OVF.

- Click Next.
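The .ovf edit in these steps can be done with sed instead of a text editor. This bash sketch maps an ESXi version to the hardware version from the compatibility table and rewrites every vmx-15 reference, keeping a .bak copy. Only the two-digit versions from the table (vmx-13, vmx-11, vmx-10) are mapped, because this guide shows only vmx-13 as a literal example; the function names are hypothetical. If the extracted directory also contains a .mf manifest, its checksum for the edited .ovf will no longer match, and vSphere may reject the import until the manifest is updated or left out.

```shell
#!/usr/bin/env bash
# vmx_for_esxi VERSION: print the hardware version to use for an ESXi host,
# taken from the compatibility table (two-digit versions only).
vmx_for_esxi() {
  case $1 in
    6.5) echo vmx-13 ;;
    6.0) echo vmx-11 ;;
    5.5) echo vmx-10 ;;
    *) echo "no mapping for ESXi $1; see the compatibility table" >&2; return 1 ;;
  esac
}

# set_ovf_hw_version OVF_FILE ESXI_VERSION: replace vmx-15 in place
# (original saved as OVF_FILE.bak) and fail if any vmx-15 remains.
set_ovf_hw_version() {
  local ovf=$1 target
  target=$(vmx_for_esxi "$2") || return 1
  sed -i.bak "s/vmx-15/$target/g" "$ovf" || return 1
  ! grep -q 'vmx-15' "$ovf"
}
```

For example, run set_ovf_hw_version FortiSIEM-VA-6.3.0.0331.ovf 6.5 in the extraction directory.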

Resolving Disk Save Error

You may encounter an error message asking you to select a valid controller for the disk if you attempt to add a 4th disk while adding the additional disks (/opt, /cmdb, /svn, and /data). This is likely due to an old IDE controller limitation in VMware, where you are normally limited to 2 IDE controllers (0 and 1), each with 2 disks (Master/Slave).

If you are attempting to add 5 disks in total, as in the following example, take the following steps:

| Disk | Usage |
|---|---|
| 1st | 25GB default for image |
| 2nd | 100GB for /opt (a single disk that FortiSIEM splits into two partitions, /opt and swap, when configFSM.sh runs) |
| 3rd | 60GB for /cmdb |
| 4th | 60GB for /svn |
| 5th | 75GB for /data |

- Go to Edit settings, and add each disk individually, clicking Save after adding each disk.

When you reach the 4th disk, you will receive the "Please select a valid controller for the disk" message. This is because the software has failed to identify the virtual device node controller (Master or Slave) for some unknown reason.

- Expand the disk settings for each disk and review which IDE Controller Master/Slave slots are in use. For example, in one installation, the 4th disk may be assigned to IDE Controller 0 when both of its Master/Slave slots are already in use. In this situation, you would need to put the 4th disk on IDE Controller 1 in the Slave position. Make the configuration change appropriate to your situation.

- Click Save to ensure your work has been saved.
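The controller limit explains why the error appears around the 4th disk: two IDE controllers with Master and Slave positions give four slots in total, and any other IDE device (a virtual CD/DVD drive, for example) takes one of them. The hypothetical bash function below prints the slot each disk would occupy under those assumptions; it only illustrates the arithmetic and does not query vSphere.

```shell
#!/usr/bin/env bash
# ide_plan DISKS [RESERVED]: show the IDE controller:position each disk would
# take, assuming 2 controllers x 2 positions (0 = Master, 1 = Slave) and
# RESERVED slots (default 0) already used by other devices such as a CD/DVD drive.
ide_plan() {
  local disks=$1 reserved=${2:-0} controllers=2 positions=2 i slot
  for (( i = 1; i <= disks; i++ )); do
    slot=$(( reserved + i - 1 ))
    if (( slot < controllers * positions )); then
      printf 'disk %d -> IDE %d:%d\n' "$i" $(( slot / positions )) $(( slot % positions ))
    else
      printf 'disk %d -> needs another controller (SCSI or SATA)\n' "$i"
    fi
  done
}
```

With no reserved slots, ide_plan 5 places the 4th disk on IDE 1:1 (Controller 1, Slave) and flags the 5th disk; with one slot reserved, ide_plan 4 1 already flags the 4th disk.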

Adding a 5th Disk for /data

When you need to add a 5th disk, such as for /data, and there is no available slot, you will need to add a SATA controller to the VM by taking the following steps:

- Go to Edit settings.

- Select Add Other Device, and select SCSI Controller (or SATA).

You will now be able to add a 5th disk for /data, and it should default to using the additional controller. You should be able to save and power on your VM. At this point, follow the normal instructions for installation.

Note: When adding the local disk in the GUI, the path should be /dev/sda or /dev/sdd. You can use one of the following commands to locate it:

# fdisk -l

or

# lsblk
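To narrow down which device is the newly added, still-empty disk, lsblk output can be filtered for whole disks that have no partitions, LVM volumes, or filesystem signature. This bash sketch assumes an lsblk that supports -P (key="value" output) and the PKNAME column, and bash 4 or later; the function names are hypothetical. Confirm the result against the size you provisioned before entering it in the GUI.

```shell
#!/usr/bin/env bash
# lsblk_field KEY LINE: print the value of KEY="..." from one `lsblk -P` line.
lsblk_field() {
  local re="(^| )$1=\"([^\"]*)\""
  if [[ $2 =~ $re ]]; then
    printf '%s' "${BASH_REMATCH[2]}"
  fi
}

# unused_disks: read `lsblk -P -o NAME,TYPE,PKNAME,FSTYPE` on stdin and print
# /dev paths of disks with no child devices and no filesystem/LVM signature.
unused_disks() {
  local line name parent
  local -a disks=()
  local -A busy=()
  while IFS= read -r line; do
    name=$(lsblk_field NAME "$line")
    [[ -n $name ]] || continue
    if [[ $(lsblk_field TYPE "$line") == disk ]]; then
      disks+=("$name")
      # A signature directly on the disk (e.g. xfs, LVM2_member) means it is in use.
      if [[ -n $(lsblk_field FSTYPE "$line") ]]; then
        busy[$name]=1
      fi
    fi
    # Any child device (partition, LVM volume, ...) marks its parent as in use.
    parent=$(lsblk_field PKNAME "$line")
    if [[ -n $parent ]]; then
      busy[$parent]=1
    fi
  done
  for name in "${disks[@]}"; do
    [[ -n ${busy[$name]:-} ]] || printf '/dev/%s\n' "$name"
  done
}
```

On the appliance, run lsblk -P -o NAME,TYPE,PKNAME,FSTYPE | unused_disks.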

Install Log

The install ansible log file is located here: /usr/local/fresh-install/logs/ansible.log.

Errors can be found at the end of the file.
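To jump straight to the failure, you can filter the end of the log for Ansible's standard failure markers. This minimal bash sketch assumes the log uses Ansible's usual fatal:, FAILED!, and ERROR! wording; the function name last_install_errors and the 200-line default are arbitrary choices.

```shell
#!/usr/bin/env bash
# last_install_errors [LOG] [LINES]: print failure lines found in the last
# LINES lines of the install log. Returns 1 (grep's status) if none are found.
last_install_errors() {
  local log=${1:-/usr/local/fresh-install/logs/ansible.log} lines=${2:-200}
  tail -n "$lines" "$log" | grep -E 'fatal:|FAILED!|ERROR!'
}
```

Run last_install_errors with no arguments on the appliance to scan the default log location.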