Configuring Disaster Recovery

Assume there are two sites: Site 1 needs to be set up as Primary, and Site 2 as Secondary. Site 2 is the DR Site.

Requirements for Successful DR Implementation

-

Two separate FortiSIEM licenses - one for each site.

-

The installation at both sites must be identical - workers, storage type, archive setup, hardware resources (CPU, Memory, Disk) of the FortiSIEM nodes.

-

All appliances (Supervisors and Workers) must have the same version of firmware.

-

DNS Names are used for the Supervisor nodes at the two sites. Make sure that users, collectors, and agents can access both Supervisor nodes by their DNS names.

-

DNS Names are used for the Worker upload addresses.

-

TCP ports for HTTPS (TCP/443), SSH (TCP/22), PostgreSQL (TCP/5432), and the private SSL communication port between phMonitor processes (TCP/7900) are open between both sites.
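The port requirement above can be checked from a Supervisor shell before starting the configuration. The following is a minimal sketch, not part of the FortiSIEM tooling: it assumes `bash` and the coreutils `timeout` command are available, and the function names `check_port` and `check_dr_ports` are made up for this example.

```shell
#!/usr/bin/env bash
# Ports the requirements list says must be open between the two sites:
# HTTPS, SSH, PostgreSQL, phMonitor.
DR_PORTS="443 22 5432 7900"

# check_port HOST PORT (hypothetical helper)
# Returns 0 if a TCP connection to HOST:PORT succeeds within 3 seconds.
check_port() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# check_dr_ports HOST (hypothetical helper)
# Prints one "HOST:PORT open" or "HOST:PORT closed" line per required port.
check_dr_ports() {
  local host="$1" port
  for port in $DR_PORTS; do
    if check_port "$host" "$port"; then
      echo "${host}:${port} open"
    else
      echo "${host}:${port} closed"
    fi
  done
}
```

Run `check_dr_ports <other-site-supervisor-dns-name>` from each site against the other. A "closed" result only means the connection attempt failed from this host; it does not say whether a firewall or the remote service is the cause.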

Disaster Recovery Site Setup

-

Set up the DR Site with 1 Supervisor and the same number of Workers as the Primary Site. Configure disks on the Workers in the same way as in the Primary Site.

-

Apply separate FortiSIEM License.

Configuration

Take the following steps to configure Disaster Recovery.

Step 1. Collect UUID and SSH Public Key from Primary (Site 1)

-

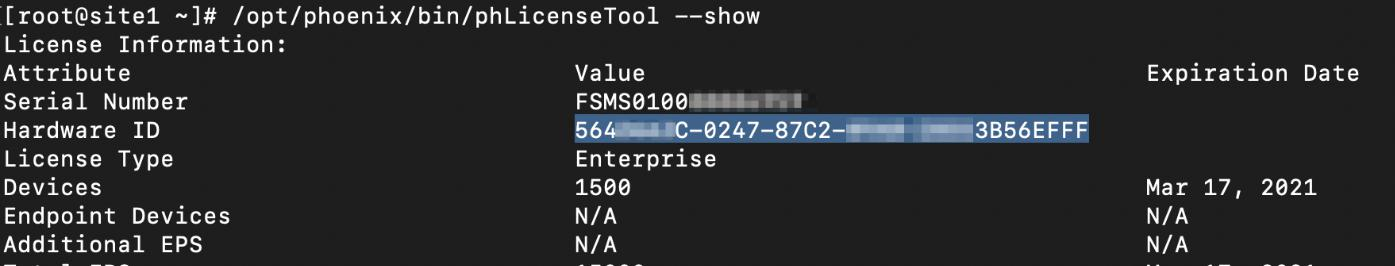

For the UUID, obtain the Hardware ID value through an SSH session by running the following command on Site 1.

/opt/phoenix/bin/phLicenseTool --show

For example:

-

Enter/paste the Hardware ID into the UUID field for the Site 1 FortiSIEM.

-

Under Configuration and Profile Replication, generate the SSH Public Key and SSH Private Key Path by entering the following in your SSH session from Site 1:

su - admin

ssh-keygen -t rsa -b 4096

Leave the file location as default, and press Enter at each passphrase prompt.

The output will appear similar to the following:

Generating public/private rsa key pair.

Enter file in which to save the key (/opt/phoenix/bin/.ssh/id_rsa):

Created directory '/opt/phoenix/bin/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /opt/phoenix/bin/.ssh/id_rsa.

Your public key has been saved in /opt/phoenix/bin/.ssh/id_rsa.pub.

The key fingerprint is:

a9:43:88:d1:ed:b0:99:b5:bb:e7:6d:55:44:dd:3e:48 admin@site1.fsmtesting.com

The key's randomart image is:

+--[ RSA 4096]----+

| ....|

| . . E. o|

-

To obtain the SSH Public Key, enter the following command and copy all of the output.

cat /opt/phoenix/bin/.ssh/id_rsa.pub
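Because the whole public key must be pasted into the GUI as one unbroken line, it can help to confirm the file looks complete before copying it. The sketch below is an illustration only: `looks_like_rsa_pubkey` is a hypothetical helper, and the pattern assumes the usual `ssh-rsa <base64> [comment]` layout that `ssh-keygen -t rsa` writes.

```shell
#!/usr/bin/env bash
# looks_like_rsa_pubkey FILE (hypothetical helper)
# Succeeds if FILE is readable and holds exactly one non-empty line of the
# form "ssh-rsa <base64> [comment]".
looks_like_rsa_pubkey() {
  local file="$1"
  [ -r "$file" ] || return 1
  # Exactly one non-empty line is expected in a public key file.
  [ "$(grep -c . "$file")" -eq 1 ] || return 1
  grep -Eq '^ssh-rsa [A-Za-z0-9+/]+=*( .*)?$' "$file"
}
```

For example, `looks_like_rsa_pubkey /opt/phoenix/bin/.ssh/id_rsa.pub && echo "key looks complete"`. This checks the shape of the file only; it does not verify that the key matches the private key.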

Step 2. Collect UUID and SSH Public Key from Secondary (Site 2)

-

On the Site 2 FortiSIEM node, SSH as root.

-

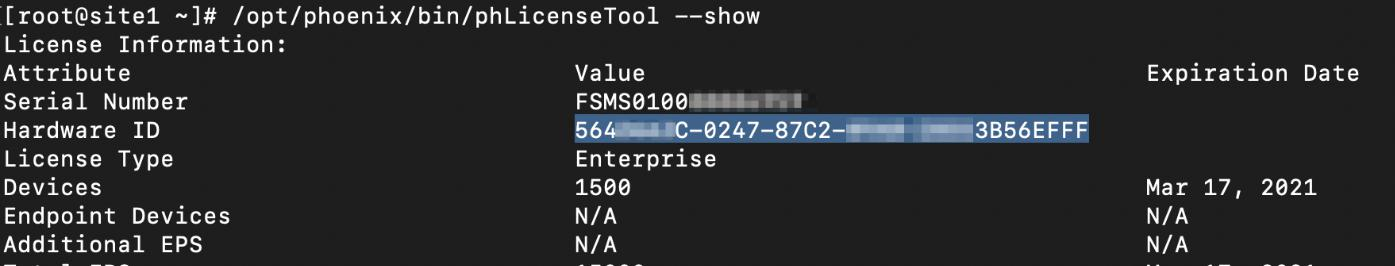

Run the following command to get the Hardware ID, also known as the UUID. Record this Site 2 Hardware ID, as you will need it later.

/opt/phoenix/bin/phLicenseTool --show

-

Generate a public key for Site 2 by running the following commands.

su - admin

ssh-keygen -t rsa -b 4096

Leave the file location as default, and press Enter at each passphrase prompt. Your output will appear similar to the following.

Generating public/private rsa key pair.

Enter file in which to save the key (/opt/phoenix/bin/.ssh/id_rsa):

Created directory '/opt/phoenix/bin/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /opt/phoenix/bin/.ssh/id_rsa.

Your public key has been saved in /opt/phoenix/bin/.ssh/id_rsa.pub.

The key fingerprint is:

a9:43:88:d1:ed:b0:99:b5:bb:e7:6d:55:44:dd:3e:48 admin@site2.fsmtesting.com

The key's randomart image is:

+--[ RSA 4096]----+

| ....|

| . . E. o|

-

Enter the following command, and copy all of the output.

cat /opt/phoenix/bin/.ssh/id_rsa.pub

You will use the output as your SSH Public Key for Site 2 in a later step.

-

Exit the admin user in the SSH session by entering the following command:

exit

Step 3. Set up Disaster Recovery on Primary (Site 1)

-

Navigate to ADMIN > License > Nodes.

-

Click Add.

-

On the Add Node window, in the Mode drop-down list, select Secondary (DR).

The primary (Site 1) node configuration fields appear in the left column, and the secondary (Site 2) node configuration fields appear in the right column.

-

Under the Host Info Role Primary column, take the following steps:

-

In the Host field, enter the host name of the Site 1 FortiSIEM.

-

In the IP field, enter the IP of the Site 1 FortiSIEM.

-

In the SSH Public Key field, enter/paste the SSH Public Key of the Site 1 FortiSIEM that you obtained earlier.

-

For the SSH Private Key Path, enter the following into the field:

/opt/phoenix/bin/.ssh/id_rsa

-

For Replication Frequency, select a value for the Site 1 FortiSIEM. This value is used for SVN and ProfileDB synchronization. The recommended value is 15 minutes.

-

Under the Host Info Role Secondary column, take the following steps:

-

In the Host field, enter the host name of the Site 2 FortiSIEM.

-

In the IP field, enter the IP address of the Site 2 FortiSIEM.

-

In the UUID field, enter/paste the Hardware ID of the Site 2 FortiSIEM that you obtained earlier.

-

In the SSH Public Key field, enter/paste the SSH Public Key of the Site 2 FortiSIEM that you obtained earlier.

-

For the SSH Private Key Path, enter the following into the field:

/opt/phoenix/bin/.ssh/id_rsa

-

Click Export and download a file named replicate.json.

Note: This file contains all of the Disaster Recovery settings, and can be used as a backup.

-

Click Save.

At this point, the Site 1 (Primary) node begins configuration, and the current step and progress of the Disaster Recovery setup are displayed in the GUI.

When completed, the message "Replicate Settings applied." will appear.
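Since the exported replicate.json can serve as a backup of the Disaster Recovery settings, it may be worth confirming the download is intact and keeping a dated copy. The sketch below assumes the file is ordinary JSON (suggested by its name, not confirmed here) and that `python3` is installed on the machine holding the download. The helper names and the backup directory are hypothetical.

```shell
#!/usr/bin/env bash
# is_valid_json FILE (hypothetical helper)
# Succeeds if FILE parses as JSON; makes no assumption about its fields.
is_valid_json() {
  python3 -m json.tool "$1" >/dev/null 2>&1
}

# backup_dr_settings FILE DEST_DIR (hypothetical helper)
# Validates FILE, then copies it to DEST_DIR under a timestamped name and
# prints the path of the copy.
backup_dr_settings() {
  local src="$1" dest_dir="$2" dest
  is_valid_json "$src" || { echo "not valid JSON: $src" >&2; return 1; }
  mkdir -p "$dest_dir" || return 1
  dest="$dest_dir/replicate-$(date +%Y%m%d-%H%M%S).json"
  cp "$src" "$dest" && echo "$dest"
}
```

For example, `backup_dr_settings ~/Downloads/replicate.json ~/fsm-dr-backups` keeps one copy per export.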

Step 4. Check Service Status on Primary and Secondary

On the Primary node, all FortiSIEM ph* services will be in an "up" state.

On the Secondary node, most ph* services will be "down" except for phQueryMaster, phQueryWorker, phDataPurger, and phMonitor.

This can be seen in the following images. They illustrate the Primary Node and Secondary Node after a full CMDB sync:

On the Secondary node, all backend processes should be down on the Supervisor and Workers except for phQueryMaster, phQueryWorker, DataPurger, DBServer, and AppServer.
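The expected state on the Secondary can be expressed as a simple allow-list check. The sketch below takes the four ph* process names listed above as the ones that should stay up and flags anything else. How the list of running process names is obtained is left open: the input format (one process name per line on standard input) is an assumption of this example, and `unexpected_up` is a hypothetical helper. DBServer and AppServer are not ph* processes and are not covered.

```shell
#!/usr/bin/env bash
# ph* processes the text above expects to remain up on the Secondary node.
EXPECTED_UP="phDataPurger phMonitor phQueryMaster phQueryWorker"

# unexpected_up (hypothetical helper)
# Reads names of running ph* processes, one per line, on stdin and prints
# every name that is not in EXPECTED_UP.
unexpected_up() {
  local name
  while IFS= read -r name; do
    [ -n "$name" ] || continue
    case " $EXPECTED_UP " in
      *" $name "*) ;;        # allowed to be up on the Secondary
      *) echo "$name" ;;     # should be down on the Secondary
    esac
  done
}
```

An empty result means only the expected processes are running. Any name printed is a process that is up on the Secondary although the text above says it should be down.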

Step 5. Clean Up ClickHouse Configuration on Secondary (Site 2)

Follow these steps:

-

Log on to the Secondary Supervisor.

-

Run the following commands.

/opt/phoenix/phscripts/clickhouse/cleanup_clickhouse_keeper.sh

/opt/phoenix/phscripts/clickhouse/cleanup_clickhouse.sh
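The two cleanup scripts in this step can also be run through a small wrapper that stops if either one is missing or fails. This is a sketch only: `run_clickhouse_cleanup` and the `CH_SCRIPT_DIR` variable are hypothetical names, and the keeper-first order simply follows the order in which the commands are given above.

```shell
#!/usr/bin/env bash
# Directory holding the cleanup scripts named in this step.
CH_SCRIPT_DIR="${CH_SCRIPT_DIR:-/opt/phoenix/phscripts/clickhouse}"

# run_clickhouse_cleanup (hypothetical helper)
# Runs the keeper cleanup, then the ClickHouse cleanup; returns non-zero as
# soon as a script is missing, not executable, or exits with an error.
run_clickhouse_cleanup() {
  local script
  for script in cleanup_clickhouse_keeper.sh cleanup_clickhouse.sh; do
    if [ ! -x "$CH_SCRIPT_DIR/$script" ]; then
      echo "missing or not executable: $CH_SCRIPT_DIR/$script" >&2
      return 1
    fi
    echo "running $script"
    "$CH_SCRIPT_DIR/$script" || return 1
  done
}
```

Call `run_clickhouse_cleanup` on the Secondary Supervisor only; the scripts remove ClickHouse configuration and are not meant for the Primary.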

Step 6. Configure Combined ClickHouse from Primary (Site 1)

Pre-requisite: If the Primary was upgraded from a FortiSIEM version earlier than 6.7.5, change file permissions by taking the following steps.

Follow these steps:

-

Log on to the Primary Supervisor.

-

Go to ADMIN > Settings > Database > ClickHouse Config.

-

Add the Secondary Workers to the appropriate ClickHouse Keeper and Data Clusters.

If there are no Secondary Workers, the Secondary Supervisor will be added instead.

-

If the Secondary Worker was a Keeper, add it to the Keeper list.

-

If the Secondary Worker was also a Data node, add it to the same shard as a replica.

-

If the Secondary Worker was both a ClickHouse Keeper and a ClickHouse Data node, add it to both.

-

Click Test and Save.

Step 7. View Replication Health

Replication progress is available by navigating to ADMIN > Health > Replication Health. For details see here.