Migrating from FortiSIEM 5.3.x or 5.4.0

Migration limitations: If migrating from 5.3.3 or 5.4.0 to 6.1.1, please be aware that the following features will not be available after migration.

- Pre-compute feature

- Elastic Cloud support

If any of these features are critical to your organization, then please wait for a later version where these features are available after migration.

This section describes how to migrate from FortiSIEM 5.3.x or 5.4.0 to 6.1.1.

Pre-Migration Checklist

To perform the migration, the following prerequisites must be met:

- Ensure that your system can connect to the network. You will be asked to provide a DNS Server and a host that can be resolved by the DNS Server and responds to ping. The host can either be an internal host or a public domain host like google.com.

- Delete the Worker from the Super GUI.

- Stop/Shutdown the Worker.

- Note the /svn partition by running the df -h command. The partition is used to mount /svn/53x-settings. You will need this information for a later step.

- Create a /svn/53x-settings directory and symlink it to /images. The /svn partition should have enough space to hold /opt/phoenix from your current system. Typically, 10 GB is enough.
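The directory and symlink from the last checklist item can be created along the following lines. This is a minimal sketch that assumes the default paths named above; the helper names has_room_for and prepare_settings_dir are hypothetical and exist only for illustration.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, paths are the ones from this guide.

# Succeeds when the filesystem holding $1 has at least as many free KB
# as the directory tree $2 currently occupies.
has_room_for() {
    target=$1
    source_dir=$2
    free_kb=$(df -Pk "$target" | awk 'NR==2 {print $4}')
    need_kb=$(du -sk "$source_dir" | awk '{print $1}')
    [ "$free_kb" -ge "$need_kb" ]
}

# Creates <svn dir>/53x-settings and points the <images> symlink at it.
prepare_settings_dir() {
    svn_dir=$1
    images_link=$2
    mkdir -p "$svn_dir/53x-settings" &&
        ln -sfn "$svn_dir/53x-settings" "$images_link"
}

# Example invocation on the 5.3.x or 5.4.0 system (run as root):
#   df -h /svn
#   has_room_for /svn /opt/phoenix && prepare_settings_dir /svn /images
```

Checking free space before creating the directory avoids discovering a full /svn partition halfway through the backup.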

Migrate All-in-one Installation

- Download the Backup Script

- Run the Backup Script and Shutdown System

- Create 6.1.1 New Root Disk

- Swap 6.1.1 OS Disk on Your 5.3.x or 5.4.0 Instance

- Boot Up the 5.3.x or 5.4.0 Instance and Migrate to 6.1.1

Download the Backup Script

Download the FortiSIEM Azure backup script to start the migration. Follow these steps:

- Download the FSM_Backup_5.3_Files_6.1.1_Build0118 file from the support site and copy it to the 5.3.x or 5.4.0 Azure instance that you are planning to migrate to 6.1.1 (for example, /svn/53x-settings).

- Unzip the .zip file, for example:

# unzip FSM_Backup_5.3_Files_6.1.1_Build0118.zip

Run the Backup Script and Shutdown System

Follow these steps to run the backup script and shut down the system:

- Go to the directory where you downloaded the backup script, for example:

# cd /svn/53x-settings/FSM_Backup_5.3_Files_6.1.1_Build0118

- Run the backup script with the sh backup command to back up the 5.3.x or 5.4.0 settings that will be migrated later into the new 6.1.1 OS. For example:

# sh backup

- Run the shutdown command to shut down the FortiSIEM instance, for example:

# shutdown -h now
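Because the instance is shut down immediately after the backup, it may be worth stopping if the backup script fails. The following is a minimal sketch under the assumption that the backup script returns a non-zero exit status on failure, which this guide does not confirm; run_backup is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: run_backup is a hypothetical helper, not part of FortiSIEM.

# Runs the backup script inside the given directory and returns its exit
# status, so the caller can decide whether it is safe to shut down.
run_backup() {
    backup_dir=$1
    if [ ! -f "$backup_dir/backup" ]; then
        echo "backup script not found in $backup_dir" >&2
        return 1
    fi
    # The subshell keeps the caller's working directory unchanged.
    ( cd "$backup_dir" && sh backup )
}

# Example invocation (run as root); shutdown only happens if the backup succeeded:
#   run_backup /svn/53x-settings/FSM_Backup_5.3_Files_6.1.1_Build0118 && shutdown -h now
```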

Create 6.1.1 New Root Disk

Follow these steps to create a new 6.1.1 root disk from the Azure portal.

- Log in to the Azure portal, select Home > Disks service, and then click Add.

- Fill in the disk details, choose Storage blob as the Source type, and select the 6.1.1 OS VHD (refer to the earlier section on how to upload this VHD).

Note: The root disk must be 25 GB, and the size must not be changed.

- Click Review + Create after filling in the rest of the details if necessary.

- Verify that all of the details are correct, then click Create.

- Wait for the deployment to complete. Click Go to resource and note the name of the resource.
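For readers who script their Azure deployments, a roughly equivalent disk can be created with the Azure CLI instead of the portal. This is an alternative sketch, not part of the portal procedure described above. The resource group, disk name, and VHD blob URI are hypothetical placeholders, and the AZ variable exists only so the command can be previewed with AZ=echo.

```shell
#!/bin/sh
# Sketch only: an Azure CLI alternative to the portal steps.
# Set AZ=echo to print the command instead of running it.
AZ=${AZ:-az}

# Creates a managed disk from the uploaded 6.1.1 OS VHD blob.
# No --size-gb is passed because the root disk size must not be changed.
create_root_disk() {
    resource_group=$1
    disk_name=$2
    vhd_uri=$3
    "$AZ" disk create \
        --resource-group "$resource_group" \
        --name "$disk_name" \
        --source "$vhd_uri" \
        --os-type Linux
}

# Example preview with hypothetical names:
#   AZ=echo create_root_disk myResourceGroup fsm611-root "https://<account>.blob.core.windows.net/<container>/<vhd>"
```

As in the portal flow, note the name of the resulting disk; it is needed when swapping the OS disk.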

Swap 6.1.1 OS Disk on Your 5.3.x or 5.4.0 Instance

Follow these steps to swap the OS disk on the 5.3.x or 5.4.0 instance:

- Navigate to the 5.3.x or 5.4.0 VM and open Disks in the sidebar. Click Swap OS disk.

- Choose the 6.1.1 root disk you just created, fill in the confirmation box and click OK.

- Wait for the OS disk swap complete notification to appear.

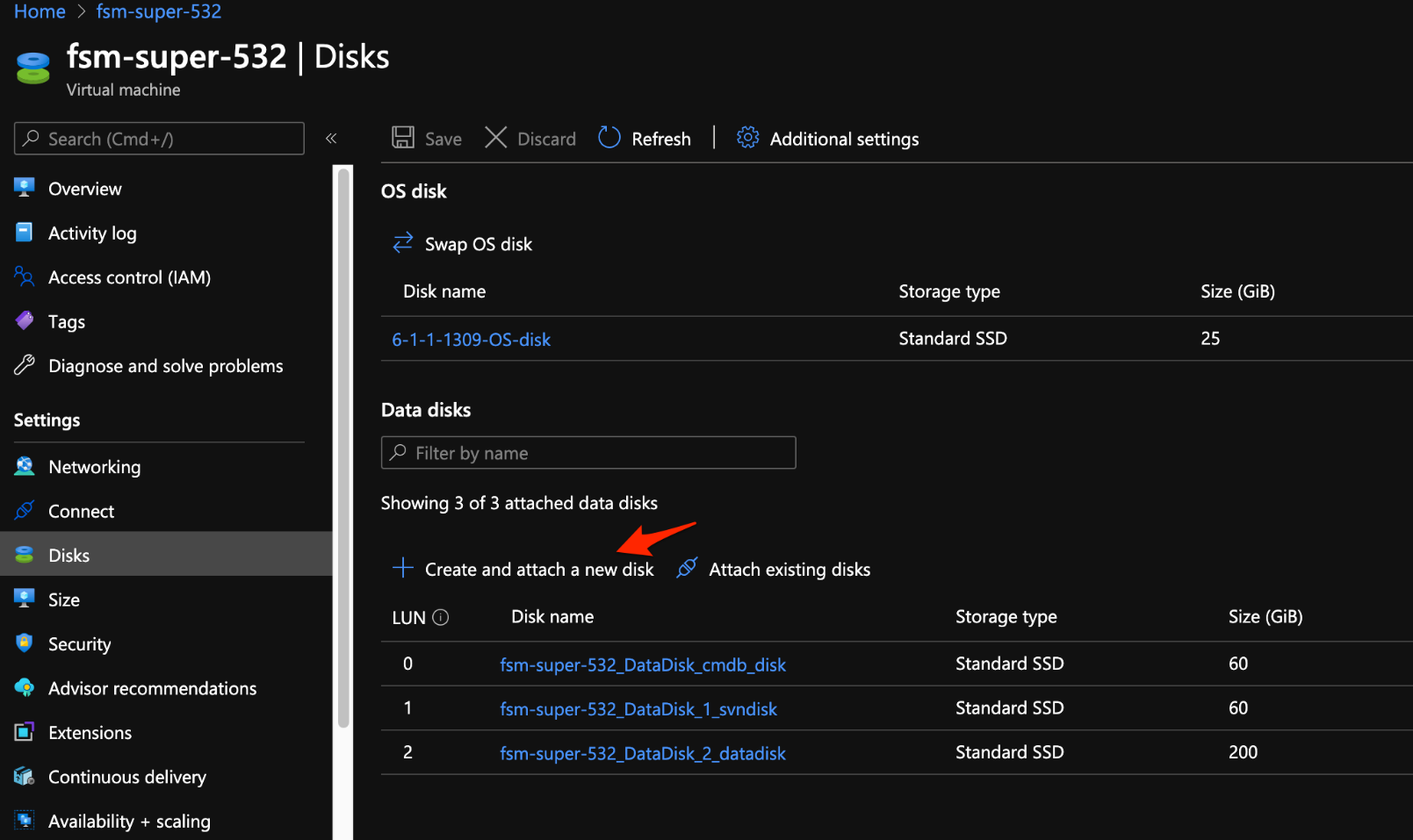

- Navigate to the VM Overview, then click Disks in the sidebar. Click Create and attach a new disk.

- Add a 100 GiB opt disk and click OK.

- On the Disks page, select Read-only under Host caching, and click Save.
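The swap and the new opt disk can also be expressed with the Azure CLI. This is an alternative sketch rather than the documented portal procedure. All resource names are hypothetical, the VM is assumed to be stopped already, and AZ=echo can be used to preview the commands without running them.

```shell
#!/bin/sh
# Sketch only: an Azure CLI alternative to the portal steps.
# Set AZ=echo to print the commands instead of running them.
AZ=${AZ:-az}

# Replaces the VM's OS disk with the given managed disk (name or ID).
swap_os_disk() {
    "$AZ" vm update --resource-group "$1" --name "$2" --os-disk "$3"
}

# Creates a new 100 GiB data disk for /opt and attaches it
# with read-only host caching.
attach_opt_disk() {
    "$AZ" vm disk attach --resource-group "$1" --vm-name "$2" \
        --name "$3" --new --size-gb 100 --caching ReadOnly
}

# Example preview with hypothetical names:
#   AZ=echo swap_os_disk myResourceGroup fsm-super fsm611-root
#   AZ=echo attach_opt_disk myResourceGroup fsm-super fsm611-opt
```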

Boot up the 5.3.x or 5.4.0 Instance and Migrate to 6.1.1

Follow these steps to complete the migration process:

- Navigate to the VM Overview, click Refresh, and Start the virtual machine.

- At the end of booting, log in with the default login credentials: User: root and Password: ProspectHills. You must use root to log in to the system after booting up the 6.1.1 OS disk. The user name configured on 5.3.x or 5.4.0 cannot be used to log in to the system.

- You will be required to change the password. Remember this password for future use.

- Use the /svn partition noted earlier and mount it to /mnt. This contains the backup of the 5.3.x or 5.4.0 system settings that will be used during migration. Copy the 5.3.x or 5.4.0 settings that were previously backed up and unmount /mnt. For example:

# mount /dev/sdb1 /mnt

# mkdir /restore-53x-settings

# cd /restore-53x-settings

# rsync -av /mnt/53x-settings/ .

# ln -sf /restore-53x-settings /images

# umount /mnt

- Run the configFSM.sh script to open the configuration GUI:

- Select 2 No in the Configure TIMEZONE dialog and then click Next.

- In Config Target, select your node type: Supervisor, Worker, or Collector. This step is usually performed on Supervisor (1 Supervisor). Click Next.

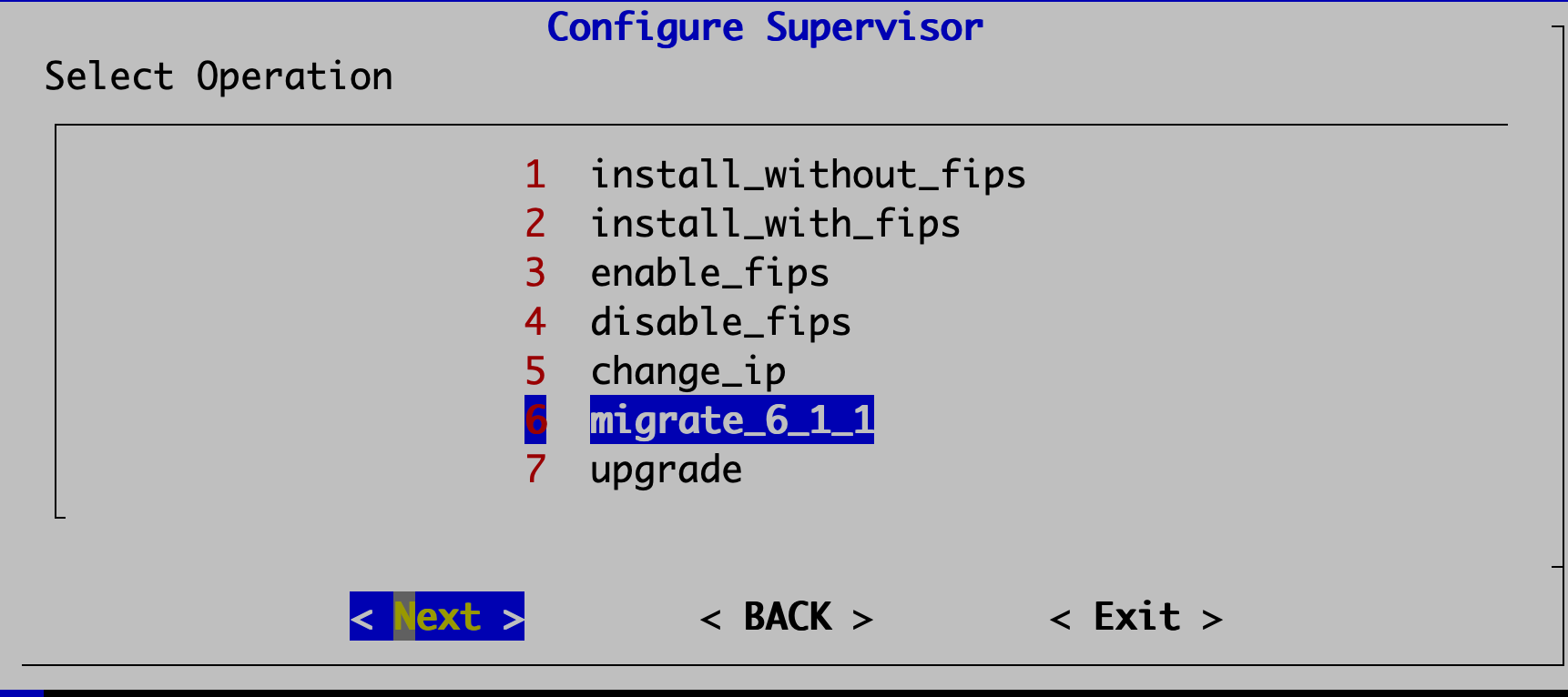

- In the Configure Supervisor, select the 6 migrate_6_1_1 operation and then click Next.

- Test network connectivity by entering a host name that can be resolved by your DNS Server (entered in the previous step) and responds to ping. The host can either be an internal host or a public domain host like google.com. For the migration to complete, the system also needs HTTPS connectivity to the FortiSIEM OS update servers: os-pkgs-cdn.fortisiem.fortinet.com and os-pkgs-c8.fortisiem.fortinet.com. Click Next.

- Click Run on the confirmation page once you have made sure all the values are correct. The options are described in the table here.

- Wait for the operations to complete and the system to reboot.

- Wait for about 2 minutes before logging into the system. Wait another 5-10 minutes for all of the processes to start up. Then, execute the phstatus command to see the status of FortiSIEM processes.

- Remove the restored settings directories because you no longer need them, for example:

# rm -rf /restore-53x-settings

# rm -rf /svn/53x-settings

# rm -f /images
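Before starting configFSM.sh, the connectivity requirements from the network test step can be checked by hand. The following is a minimal sketch, assuming ping and curl are available on the 6.1.1 OS disk; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: function names are hypothetical.

# Update servers named in the network test step; both need HTTPS access.
UPDATE_SERVERS="os-pkgs-cdn.fortisiem.fortinet.com os-pkgs-c8.fortisiem.fortinet.com"

# Succeeds if the host name resolves and answers a single ping.
host_responds() {
    ping -c 1 "$1" >/dev/null 2>&1
}

# Succeeds if an HTTPS connection to the host can be established.
# Any HTTP status counts; only connection or TLS failures are errors.
https_reachable() {
    curl --silent --head --max-time 10 --output /dev/null "https://$1/"
}

# Reports each update server and fails if any of them is unreachable.
check_update_servers() {
    rc=0
    for server in $UPDATE_SERVERS; do
        if https_reachable "$server"; then
            echo "ok   $server"
        else
            echo "FAIL $server"
            rc=1
        fi
    done
    return $rc
}

# Example invocation:
#   host_responds google.com && check_update_servers
```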


Migrate Cluster Installation

This section provides instructions on how to migrate Supervisor, Workers, and Collectors separately in a cluster environment:

- Delete Workers

- Migrate Supervisor

- Install New Worker(s)

- Register Workers

- Set Up Collector-to-Worker Communication

- Working with Pre-6.1.0 Collectors

- Install 6.1.1 Collectors

- Register 6.1.1 Collectors

Delete Workers

- Log in to the Supervisor.

- Go to Admin > License > Nodes and delete the Workers one-by-one.

- Go to the Admin > Cloud Health page and make sure that the Workers are not present.

Note that the Collectors will buffer events while the Workers are down.

- Shut down the Workers.

SSH to the Workers one-by-one and shut them down.
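With several Workers, the shutdown step can be looped. This is a minimal sketch that assumes root SSH access to each Worker; the helper name and the addresses in the example are hypothetical. Run it only after the Workers have been deleted from the Supervisor and no longer appear on the Cloud Health page.

```shell
#!/bin/sh
# Sketch only: shutdown_workers is a hypothetical helper.

# Connects to each Worker in turn and shuts it down.
# Unreachable Workers are reported and counted, not silently skipped.
shutdown_workers() {
    failed=0
    for worker in "$@"; do
        echo "Shutting down $worker"
        if ! ssh "root@$worker" 'shutdown -h now'; then
            echo "could not shut down $worker" >&2
            failed=$((failed + 1))
        fi
    done
    [ "$failed" -eq 0 ]
}

# Example invocation with hypothetical addresses:
#   shutdown_workers 10.0.0.11 10.0.0.12
```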

Migrate Supervisor

Follow the steps in Migrate All-in-one Installation to migrate the Supervisor node. Note: FortiSIEM 6.1 does not support Worker or Collector migration.

Install New Worker(s)

Follow the steps in Cluster Installation > Install Workers to install new Workers. You can either keep the same IP address or change the address.

Register Workers

Follow the steps in Cluster Installation > Register Workers to register the newly created 6.1.1 Workers to the 6.1.1 Supervisor. The 6.1.1 FortiSIEM Cluster is now ready.

Set Up Collector-to-Worker Communication

- Go to Admin > Systems > Settings.

- Add the Workers to the Event Worker or Query Worker as appropriate.

- Click Save.

Working with Pre-6.1.0 Collectors

Pre-6.1.0 Collectors and agents will work with the 6.1.1 Supervisor and Workers. You can install 6.1.1 Collectors at your convenience.

Install 6.1.1 Collectors

FortiSIEM does not support Collector migration to 6.1.1. You can install new 6.1.1 Collectors and register them to 6.1.1 Supervisor in a specific way so that existing jobs assigned to Collectors and Windows agent associations are not lost. Follow these steps:

- Copy the http hashed password file (/etc/httpd/accounts/passwds) from the old Collector.

- Disconnect the pre-6.1.1 Collector.

- Install the 6.1.1 Collector with the old IP address by following the steps in Cluster Installation > Install Collectors.

- Copy the saved http hashed password file (/etc/httpd/accounts/passwds) from the old Collector to the 6.1.1 Collector. This step is needed for Agents to work seamlessly with 6.1.1 Collectors. The reason for this step is that when the Agent registers, a password for Agent-to-Collector communication is created and the hashed version is stored in the Collector. During 6.1.1 migration, this password is lost.
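The two copy steps above can be sketched with scp from an administrator workstation. This assumes root SSH access to both the old and the new Collector; the helper names, the address, and the local file name are hypothetical.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical.

# Path of the http hashed password file named in the steps above.
PASSWD_FILE=/etc/httpd/accounts/passwds

# Step 1: save the file from the old Collector to a local path.
save_collector_passwds() {
    collector=$1
    local_copy=$2
    scp -p "root@$collector:$PASSWD_FILE" "$local_copy"
}

# Step 4: put the saved file onto the newly installed 6.1.1 Collector.
restore_collector_passwds() {
    local_copy=$1
    collector=$2
    scp -p "$local_copy" "root@$collector:$PASSWD_FILE"
}

# Example invocation with a hypothetical address:
#   save_collector_passwds 10.0.0.21 ./collector01-passwds
#   ... disconnect the old Collector, install the 6.1.1 Collector on the same IP ...
#   restore_collector_passwds ./collector01-passwds 10.0.0.21
```

Because the new Collector reuses the old IP address, the SSH client will likely report a changed host key on the second copy; that is expected after a reinstall.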

Register 6.1.1 Collectors

Follow the steps in Cluster Installation > Register Collectors, with the following difference: in the phProvisionCollector command, use the --update option instead of --add. Other than this, use exactly the same parameters that were used to register the pre-6.1.1 Collector. Specifically, use this form of the phProvisionCollector command to register a 6.1.1 Collector and keep the old associations:

# /opt/phoenix/bin/phProvisionCollector --update <user> '<password>' <Super IP or Host> <Organization> <CollectorName>

The password should be enclosed in single quotes to ensure that any non-alphanumeric characters are escaped.
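The effect of the quoting can be seen with a short shell experiment. The password and the argument values in the final comment are hypothetical and serve only to illustrate the point.

```shell
#!/bin/sh
# Hypothetical password containing characters the shell treats specially.
word=oops
single='Pa$word#1'   # single quotes: kept exactly as typed
double="Pa$word#1"   # double quotes: $word is expanded by the shell

printf 'single-quoted: %s\n' "$single"   # prints: single-quoted: Pa$word#1
printf 'double-quoted: %s\n' "$double"   # prints: double-quoted: Paoops#1

# A password that itself contains a single quote needs the '\'' idiom.
tricky='it'\''s'
printf 'with quote:    %s\n' "$tricky"   # prints: with quote:    it's

# Hypothetical invocation, all argument values are placeholders:
#   /opt/phoenix/bin/phProvisionCollector --update admin 'Pa$word#1' 10.0.0.5 super collector01
```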

Re-install new Windows Agents with the old InstallSettings.xml file. Both the migrated and the new agents will work. The new Linux Agent and migrated Linux Agent will also work.