Data backup

FortiAnalyzer-BigData supports disaster recovery and data portability. You can back up all the data within a Storage Group to Hadoop Distributed File System (HDFS) in Parquet file format.

To back up data:

- From the Data page, locate the storage group you want to back up and select Actions > Backup.

The Backup Storage Group Configuration dialog loads with the following fields:

| Field name | Description |
| --- | --- |
| HDFS Url | Defines the target directory of the HDFS cluster. By default, the field is set to the built-in HDFS in the Security Event Manager. If the URL points to an external HDFS cluster, all of its hosts must be accessible from the Security Event Manager hosts (see Backup and restore to external HDFS). |
| Clean Previous Backup Data | Enable to delete any previous backup data and start a new backup. Do not enable if you want to create an incremental backup. |
| Backup Timeout | Enter the number of hours before the backup job times out. After the timeout, the job aborts. |
| Enable Safe Mode | By default, the normal backup job processes multiple tables in parallel and ignores any intermediate errors. Enable Safe Mode to back up the Storage Group tables sequentially and to fail early if any error occurs. This mode may take longer to complete the backup, so only enable Safe Mode when the normal backup job fails. |
| Advanced Config | These configurations define the resources used for the job. Normal users should keep the default configurations. |
| Enable Scheduled Backup | Enable so the backup can be scheduled automatically. |

- When you are finished, click Save & Backup to begin the backup process.

- You can monitor the status of your backup by navigating to Jobs > Storage Group Backup.
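The difference between the normal backup job and Safe Mode, as described for the Enable Safe Mode field, can be pictured with a small Python sketch. This is only an illustration of the two strategies (parallel with errors ignored versus sequential with early failure), not the product's implementation. The function `backup_table` and the table names are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def backup_table(name):
    """Hypothetical stand-in for backing up one table.

    For illustration, any table whose name starts with "bad" fails.
    """
    if name.startswith("bad"):
        raise RuntimeError(f"backup failed for {name}")
    return name


def run_normal(tables):
    """Normal mode: process tables in parallel and keep going on errors."""
    done, errors = [], []
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = [pool.submit(backup_table, t) for t in tables]
        for fut in as_completed(futures):
            try:
                done.append(fut.result())
            except RuntimeError as exc:
                # Intermediate errors are recorded but do not stop the job.
                errors.append(str(exc))
    return sorted(done), errors


def run_safe(tables):
    """Safe mode: process tables one by one and stop at the first error."""
    done = []
    for t in tables:
        done.append(backup_table(t))  # an exception here aborts the whole run
    return done
```

In this sketch, `run_normal(["a", "bad_b", "c"])` finishes tables `a` and `c` and reports one error, while `run_safe` with the same input raises as soon as it reaches `bad_b`. This mirrors why Safe Mode is slower but surfaces the failing table immediately.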

Incremental backups

We recommend that you create incremental backups by consistently backing up new data to the same HDFS directory.

The first time a backup job runs, a full backup of the storage group data is saved to the HDFS directory. Subsequent runs perform incremental backups, which contain only the rows that have changed since the initial full backup. As a result, subsequent backups are faster and more efficient.
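The idea of a full first run followed by smaller incremental runs can be sketched in a few lines of Python. This is a conceptual model only: the dictionaries stand in for the storage group rows and for the contents of the backup directory, and the function name `run_backup` and its `clean_previous` flag (modeled on the Clean Previous Backup Data option) are assumptions made for illustration. It does not describe how the product actually tracks changes.

```python
def run_backup(source_rows, backup_state, clean_previous=False):
    """Return the rows written by this run and update backup_state in place.

    source_rows:  dict mapping row id -> row content (the storage group)
    backup_state: dict mapping row id -> row content already backed up
    """
    if clean_previous:
        # Discarding earlier backup data forces a full backup.
        backup_state.clear()
    changed = {
        row_id: row
        for row_id, row in source_rows.items()
        if row_id not in backup_state or backup_state[row_id] != row
    }
    backup_state.update(changed)
    return changed


state = {}
first = run_backup({1: "a", 2: "b"}, state)            # full: rows 1 and 2
second = run_backup({1: "a", 2: "B", 3: "c"}, state)   # incremental: rows 2 and 3
```

The first call writes every row because the backup target is empty. The second call writes only the modified row and the new row, which is why repeated backups to the same directory tend to be cheaper than starting over.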

To create manual incremental backups:

If you have already created a previous backup, you can manually create an incremental backup against it.

- From the navigation bar, go to Jobs and click Storage Group Backup to view all the completed backups.

- Select the backup which you want to create an incremental backup against and click View Config.

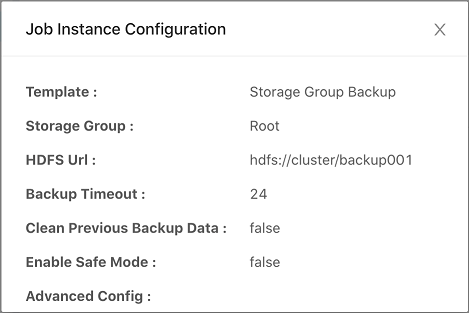

The Job Instance Configuration dialog loads.

- In the HDFS Url field, copy the URL.

For example: hdfs://cluster/backup/7o7T

- Go to Data, select the same Storage Group as the previous backup, and click Actions > Backup.

- In the HDFS Url field, paste the URL that you copied from the Job Instance Configuration dialog.

You can check the number of existing backups in the Backup Storage Group Configuration dialog.

- Ensure the Clean Previous Backup Data option is disabled so that the previous backup data is kept and this backup is incremental.

You can enable this option to make a full backup to the HDFS directory; however, a full backup job is more time-consuming than an incremental backup.

- When you are finished, click Save & Backup to begin the backup process.
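Because an incremental backup depends on reusing exactly the same HDFS directory, it can help to compare the copied URL with the one you are about to submit. The following Python sketch uses only the standard library. The helper name `same_backup_target` is hypothetical, and the second URL in the usage lines is made up for illustration.

```python
from urllib.parse import urlparse


def same_backup_target(previous_url, new_url):
    """Return True if both URLs point at the same HDFS host and directory."""
    prev = urlparse(previous_url.strip())
    new = urlparse(new_url.strip())
    if prev.scheme != "hdfs" or new.scheme != "hdfs":
        raise ValueError("expected an hdfs:// URL")
    # Ignore a trailing slash so that /backup/7o7T and /backup/7o7T/ match.
    return (prev.netloc, prev.path.rstrip("/")) == (new.netloc, new.path.rstrip("/"))


# Same directory as the earlier backup, so the new run can be incremental.
matches = same_backup_target("hdfs://cluster/backup/7o7T", "hdfs://cluster/backup/7o7T/")

# A different (hypothetical) directory would start a separate full backup.
differs = same_backup_target("hdfs://cluster/backup/7o7T", "hdfs://cluster/backup/other")
```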

To create scheduled incremental backups:

You can also schedule incremental backup jobs by enabling the Enable Scheduled Backup option. This schedules incremental backup jobs to the HDFS directory you set. Fortinet strongly recommends scheduling maintenance jobs at off-peak hours.